OAR Hardware: A Comprehensive Guide to Open Accelerator Research

This guide gives an overview of Open Accelerator Research and the OAR hardware: its architecture, functions, and applications, and the advantages it offers for accelerator research. It also touches on the OAR hardware community and its contributions to the field, and is meant as a reference for researchers and developers who want to learn more about OAR hardware.

Open Accelerator Research (OAR) is an ongoing effort to develop and optimize hardware acceleration solutions for high-performance computing (HPC) applications. OAR hardware provides a platform for researchers to explore and evaluate various acceleration techniques and architectures, with the goal of improving the performance and energy efficiency of HPC systems.

The sections below give an overview of the project, introduce the key components of OAR hardware, and discuss its applications and benefits. They also cover the challenges of OAR hardware development and the opportunities it presents for improving future HPC system performance.

Project Overview

OAR hardware was first introduced in 2014 by a team of researchers from the University of Michigan. Since then, the project has continued to develop, with support from organizations and institutions worldwide. The platform is built around a set of custom chips, called OAR Accelerators, that take over specific HPC workload functions from the main CPU. Offloading this work to dedicated hardware is intended to improve the performance of HPC systems while reducing their energy consumption.

Key Components

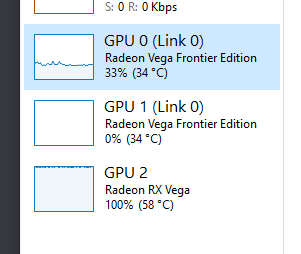

OAR hardware has three key components: the OAR Accelerators themselves, the software framework used to program and manage them, and the underlying technology that supports their operation. The OAR Accelerators are custom chips that take over specific HPC workload functions, such as matrix multiplication, floating-point operations, or data compression. The software framework provides a programming interface to the accelerators so that researchers can integrate them into their HPC applications. The underlying technology is the microarchitecture and circuitry that lets the accelerators operate efficiently.
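The role of the software framework can be illustrated with a minimal sketch of an offload dispatcher. This is not the OAR programming interface, which this guide does not specify; `OffloadRegistry`, `register_cpu`, `register_accelerator`, and `backend_for` are hypothetical names chosen for illustration. The sketch only shows the general pattern: the application asks for an operation by name, and the framework routes it to an accelerator kernel if one is registered, or to a CPU implementation otherwise.

```python
from typing import Callable, Dict, List

Matrix = List[List[float]]
Kernel = Callable[[Matrix, Matrix], Matrix]


def cpu_matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain CPU matrix multiplication, used here as the fallback path."""
    inner = len(b)
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")
    cols = len(b[0]) if b else 0
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(len(a))
    ]


class OffloadRegistry:
    """Hypothetical dispatcher (not the OAR API): routes a named operation
    to an accelerator kernel when one is registered, else to the CPU."""

    def __init__(self) -> None:
        self._cpu: Dict[str, Kernel] = {}
        self._accelerator: Dict[str, Kernel] = {}

    def register_cpu(self, name: str, kernel: Kernel) -> None:
        self._cpu[name] = kernel

    def register_accelerator(self, name: str, kernel: Kernel) -> None:
        self._accelerator[name] = kernel

    def backend_for(self, name: str) -> str:
        """Report which backend would run the operation."""
        if name in self._accelerator:
            return "accelerator"
        if name in self._cpu:
            return "cpu"
        raise KeyError(f"no kernel registered for {name!r}")

    def run(self, name: str, a: Matrix, b: Matrix) -> Matrix:
        if self.backend_for(name) == "accelerator":
            return self._accelerator[name](a, b)
        return self._cpu[name](a, b)


registry = OffloadRegistry()
registry.register_cpu("matmul", cpu_matmul)
# No accelerator kernel is registered, so this call runs on the CPU path.
product = registry.run("matmul", [[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]])
```

Keeping a CPU fallback for every operation means the same application code runs whether or not an accelerator is present, which is one common way to ease integration into an existing software stack.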

Applications and Benefits

OAR hardware has been applied in several HPC areas, including scientific computing, machine learning, and data analytics. In each of them, OAR Accelerators take computationally intensive tasks off the main CPU, improving performance while reducing energy consumption.

In scientific computing, OAR Accelerators can handle matrix multiplication, an operation common to many scientific algorithms. Moving these operations to dedicated hardware speeds up the applications that depend on them.

In machine learning, OAR hardware can accelerate both training and inference, so that models are trained faster and more efficiently.

In data analytics, OAR hardware can take over data processing tasks such as compression and encryption. These tasks are computationally intensive and time-consuming on a general-purpose CPU, and OAR Accelerators can perform them faster and more efficiently.
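How much an application gains from offloading depends on how much of its run time the offloaded task accounts for. A rough estimate can be made with Amdahl's law, a general result that is not specific to OAR. The sketch below assumes a hypothetical application that spends 80% of its run time in matrix multiplication and an accelerator that runs that kernel 10 times faster; both numbers are illustrative, not measurements of OAR hardware, and data-transfer overhead is ignored.

```python
def offload_speedup(offloaded_fraction: float, kernel_speedup: float) -> float:
    """Overall speedup from Amdahl's law.

    offloaded_fraction: share of the original run time spent in the
        offloaded task (between 0 and 1).
    kernel_speedup: how many times faster the accelerator runs that task.
    """
    if not 0.0 <= offloaded_fraction <= 1.0:
        raise ValueError("offloaded_fraction must be between 0 and 1")
    if kernel_speedup <= 0.0:
        raise ValueError("kernel_speedup must be positive")
    remaining = 1.0 - offloaded_fraction          # work that stays on the CPU
    accelerated = offloaded_fraction / kernel_speedup
    return 1.0 / (remaining + accelerated)


# Illustrative numbers only: 80% of run time offloaded, kernel 10x faster.
overall = offload_speedup(0.8, 10.0)
print(f"overall speedup: {overall:.2f}x")
```

With these assumed numbers the overall speedup is about 3.57x rather than 10x, and no accelerator, however fast, could push it much past 5x, because the remaining 20% of the work still runs on the CPU. This is why the choice of which functions to offload matters as much as the speed of the accelerator itself.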

Challenges and Opportunities

Despite its potential benefits, OAR hardware development faces several challenges. The first is the complexity of designing and manufacturing custom chips such as the OAR Accelerators, which requires a deep understanding of microarchitecture and circuit design as well as expertise in chip manufacturing processes. The second is integration: fitting OAR Accelerators into existing HPC systems requires changes to both the system architecture and the software stack. The third is the limited availability of people with expertise in both HPC and hardware acceleration techniques.

These challenges aside, OAR hardware presents real opportunities for improving future HPC system performance. More efficient acceleration techniques and architectures could raise the performance of HPC systems across many applications. Broader adoption of OAR hardware, through collaboration with industry partners and open-source communities, could also make the HPC ecosystem more inclusive and accessible.

In conclusion, OAR hardware is a project that aims to improve the performance and energy efficiency of high-performance computing systems through hardware acceleration. Understanding its key components, applications, and benefits, as well as the challenges it faces, makes it easier to evaluate its potential impact on future HPC system development worldwide.
