Revolutionizing AI and Machine Learning: A Comprehensive Guide to Hardware Accelerator Systems

AI and machine learning have advanced rapidly in recent years, and hardware accelerator systems have played a crucial role in that growth. These systems speed up the processing of the large amounts of data needed to train complex models. In this guide, we explore the main types of hardware accelerators available today, including GPUs, TPUs, FPGAs, and ASICs. We also discuss the challenges developers face when integrating these accelerators into existing systems and offer tips for optimizing their performance. Finally, we examine the latest trends in hardware acceleration and how they are affecting AI and machine learning applications across industries. Whether you are a developer looking to improve the performance of your system or an industry professional looking to stay ahead of the curve, this guide provides an overview of the key developments in hardware acceleration for AI and machine learning.

Artificial intelligence (AI) and machine learning (ML) have driven significant advances in industries such as healthcare, finance, transportation, and manufacturing. However, the growing complexity of ML models and the demand for faster processing have created a need for more efficient hardware accelerator systems. This guide covers hardware accelerator systems for AI and ML, exploring their key components, design principles, and recent advancements.

1. Introduction to Artificial Intelligence and Machine Learning

The first section will introduce readers to the fundamental concepts of AI and ML, including their history, types of algorithms, and applications. This will set the context for the discussion that follows and provide a background understanding of the challenges these technologies face in terms of performance.

2. Overview of Hardware Acceleration for AI and ML

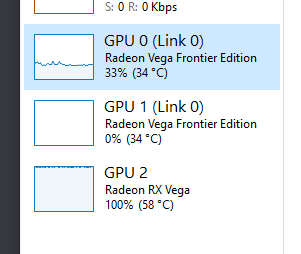

In this section, we will provide an overview of hardware acceleration systems and their role in speeding up AI and ML tasks. The focus will be on how these systems improve algorithm performance by offloading computations from the central processing unit (CPU) to specialized hardware. We will also discuss the main categories of hardware accelerators used for AI and ML: graphics processing units (GPUs), field-programmable gate arrays (FPGAs), application-specific integrated circuits (ASICs), and tensor processors such as tensor processing units (TPUs), which are themselves a type of ASIC.

3. Key Components of Hardware Acceleration Systems

This section delves into the essential components of hardware accelerator systems and their functions in accelerating AI and ML tasks. We will cover the following aspects:

a. Input/Output (I/O) resources: These are the primary interfaces between the accelerator and the rest of the system, responsible for transferring data between the CPU and the accelerator. Examples include PCIe Gen3 and PCIe Gen4, as well as NVIDIA's NVLink, which can also connect accelerators directly to one another.

b. Memory subsystem: This component stores model weights, input data, and the intermediate results generated during training and inference. It spans several levels, from registers and on-chip memory to off-chip DRAM such as HBM2 or GDDR6.

c. Compute units: These are specialized processing elements designed to execute specific arithmetic or logic operations, most commonly the multiply-accumulate (MAC) operations that dominate neural-network workloads. In hardware accelerator systems for AI and ML, compute units can be implemented in various ways, such as digital signal processing (DSP) blocks, field-programmable gate arrays (FPGAs), application-specific integrated circuits (ASICs), or neuromorphic architectures.

d. Control unit(s): This component manages the flow of instructions between the different processing elements in the hardware accelerator. It may also include features such as dynamic reconfiguration, load balancing, and power management.
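The I/O, memory, and compute components described above each put a ceiling on performance. The Python sketch below estimates two of those ceilings under stated assumptions: the theoretical bandwidth of a PCIe Gen3 or Gen4 x16 link (roughly 15.75 GB/s and 31.5 GB/s per direction after 128b/130b encoding, ignoring protocol overhead), and the roofline bound, in which attainable throughput is the lower of peak compute and memory bandwidth multiplied by arithmetic intensity (FLOPs per byte moved). The device figures in the example (100 TFLOP/s, 1,000 GB/s) are hypothetical, and the function names are illustrative.

```python
"""Back-of-envelope performance bounds for an accelerator kernel.

All device numbers used in the example are hypothetical placeholders,
not specifications of any particular product.
"""

# Theoretical per-lane PCIe data rate in GB/s after line-code overhead:
# Gen3 signals at 8 GT/s with 128b/130b encoding; Gen4 doubles the rate.
PCIE_LANE_GBS = {3: 8 * 128 / 130 / 8, 4: 16 * 128 / 130 / 8}


def pcie_bandwidth_gbs(gen: int, lanes: int = 16) -> float:
    """Theoretical one-direction link bandwidth in GB/s."""
    return PCIE_LANE_GBS[gen] * lanes


def transfer_time_s(num_bytes: float, gen: int, lanes: int = 16) -> float:
    """Lower bound on host-to-device copy time (ignores protocol overhead)."""
    return num_bytes / (pcie_bandwidth_gbs(gen, lanes) * 1e9)


def roofline_gflops(peak_gflops: float, mem_bw_gbs: float, flops_per_byte: float) -> float:
    """Attainable throughput: limited either by compute units or by memory bandwidth."""
    return min(peak_gflops, mem_bw_gbs * flops_per_byte)


if __name__ == "__main__":
    # Copying 1 GiB of input data over a x16 link of each generation.
    one_gib = 2**30
    for gen in (3, 4):
        print(f"Gen{gen} x16: {pcie_bandwidth_gbs(gen):.2f} GB/s, "
              f"{transfer_time_s(one_gib, gen) * 1e3:.1f} ms per GiB")

    # Hypothetical device: 100 TFLOP/s peak compute, 1,000 GB/s memory bandwidth.
    peak, bw = 100_000.0, 1_000.0
    for intensity in (1.0, 10.0, 100.0, 1000.0):  # FLOPs per byte moved
        print(f"intensity {intensity:>6}: {roofline_gflops(peak, bw, intensity):,.0f} GFLOP/s")
```

With these hypothetical figures, any kernel performing fewer than about 100 FLOPs per byte is limited by the memory subsystem rather than by the compute units, which is one reason accelerators devote so much area to on-chip memory and high-bandwidth DRAM.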

4. Design Principles of Hardware Acceleration Systems

This section discusses some of the fundamental design principles that guide the development of hardware accelerator systems for AI and ML. Key topics include:

a. Scalability: Hardware accelerator systems must be able to scale up or down depending on the requirements of the application, while maintaining consistent performance levels across different workloads.

b. Energy efficiency: As energy consumption becomes increasingly critical, hardware accelerator systems must deliver high performance per watt by keeping compute units well utilized, reducing power consumption, and powering down components when they are idle.

c. Interoperability: Hardware accelerator systems should support a wide range of algorithms and frameworks, enabling seamless integration with existing software stacks and toolsets.

d. Cost-effectiveness: The cost of developing and deploying hardware accelerator systems should be reasonable relative to their performance benefits, making them accessible to a broad range of organizations and industries.
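Scalability is often reasoned about with Amdahl's law: if a fraction p of the work can be split across N accelerators and the rest stays serial, the speedup is 1 / ((1 - p) + p / N). The Python sketch below is a minimal illustration of that formula; the 95% parallel fraction is a hypothetical value rather than a measurement, and the function names are illustrative.

```python
"""Amdahl's-law estimate of multi-accelerator scaling.

The parallel fraction in the example is a hypothetical value,
not a measurement of any real workload.
"""


def amdahl_speedup(parallel_fraction: float, num_devices: int) -> float:
    """Ideal speedup when only `parallel_fraction` of the work splits across devices."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if num_devices < 1:
        raise ValueError("num_devices must be >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / num_devices)


def scaling_efficiency(parallel_fraction: float, num_devices: int) -> float:
    """Speedup per device; 1.0 means perfectly linear scaling."""
    return amdahl_speedup(parallel_fraction, num_devices) / num_devices


if __name__ == "__main__":
    p = 0.95  # hypothetical: 95% of the work parallelizes across accelerators
    for n in (1, 2, 8, 64):
        print(f"{n:>3} devices: speedup {amdahl_speedup(p, n):5.2f}x, "
              f"efficiency {scaling_efficiency(p, n):.0%}")
```

Under that assumption, 64 devices deliver only about a 15x speedup (roughly 24% efficiency), and no number of devices can exceed 20x. Low scaling efficiency also means devices sit partly idle, which works against the energy-efficiency and cost-effectiveness goals listed above.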

5. Recent Advancements in Hardware Acceleration for AI and ML

This section explores some of the recent advancements in hardware accelerator technology for AI and ML, focusing on areas such as:

a. Deep learning inference acceleration: Model compression techniques such as quantization, pruning, and knowledge distillation can reduce the computational and memory requirements of inference, often with little loss in accuracy.

b. Hybrid computing: Hybrid architectures that combine multiple computing elements, such as CPUs, GPUs, FPGAs, and ASICs, can deliver significant performance gains by assigning each part of a workload to the element best suited to it.

c. Neuromorphic computing: Neuromorphic architectures inspired by biological neural networks can enable highly parallel computation and low power consumption.
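Two of the compression techniques mentioned above, quantization and pruning, are simple enough to sketch directly. The Python below assumes symmetric per-tensor int8 quantization (scale = max|w| / 127) and unstructured magnitude pruning; these are common textbook variants chosen for illustration, the weights are made up, and the function names are hypothetical.

```python
"""Minimal sketches of int8 quantization and magnitude pruning.

Symmetric per-tensor quantization and unstructured magnitude pruning are
illustrative choices; real toolchains offer many variants.
"""
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map [-max|x|, max|x|] onto [-127, 127]."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the int8 codes."""
    return [x * scale for x in q]


def magnitude_prune(values: List[float], sparsity: float) -> List[float]:
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(values) * sparsity)  # number of weights to drop
    if k == 0:
        return list(values)
    # Indices of the k smallest |w|; sorted() is stable, so ties keep position order.
    drop = set(sorted(range(len(values)), key=lambda i: abs(values[i]))[:k])
    return [0.0 if i in drop else v for i, v in enumerate(values)]


if __name__ == "__main__":
    weights = [0.82, -0.03, 0.41, -1.27, 0.005, 0.66, -0.12, 0.09]  # made-up weights
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print("int8 codes:", q)
    print(f"scale={scale:.5f}, max abs error={max_err:.5f} (at most scale/2)")
    print("50% pruned:", magnitude_prune(weights, 0.5))
```

Each dequantized value lies within half a quantization step (scale / 2) of the original, which is why accuracy often degrades only slightly; whether it does for a particular model still has to be checked empirically. Unstructured sparsity like this saves compute only on hardware or kernels that can skip the zeroed weights.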
