The True Value of Hardware in PyTorch

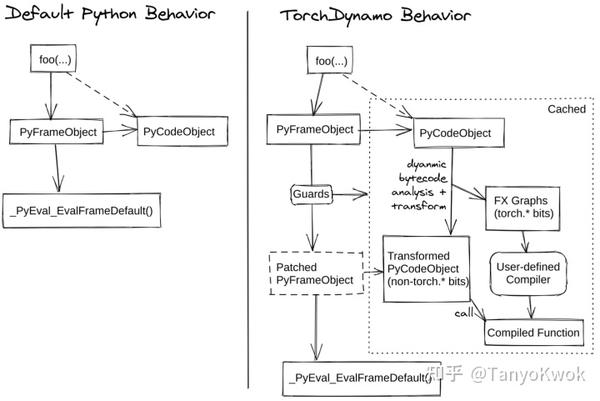

PyTorch, a popular deep learning framework, supports hardware acceleration. Tensors and models can run on GPUs, and other accelerators such as TPUs can be reached through additional backends, so users can train and evaluate neural networks far more quickly than on a CPU alone. Faster hardware does not by itself make a model more accurate, but shorter training times make larger models and more experiments practical within the same time and budget. This has made high-performance computing resources and state-of-the-art techniques accessible to a much wider audience, and hardware support in deep learning frameworks is likely to keep improving.

PyTorch is open source and has become popular for its flexibility and efficiency. It provides tools for everything from a simple neural network to complex deep learning models, serving researchers and developers alike. As with any technology, though, getting good performance involves trade-offs. One of the most important is the choice of hardware on which to run the PyTorch code.

In this article, we explore the true value of hardware in PyTorch. We'll discuss how hardware choices affect the performance of your code, how hardware acceleration speeds up training and inference, and how to choose the right hardware for your needs.

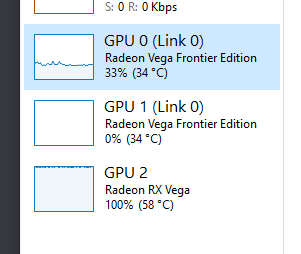

Hardware choices in PyTorch can be broadly divided into two categories: CPUs and GPUs. CPUs, or Central Processing Units, are the general-purpose processors found in every computer. They have a relatively small number of powerful cores, run PyTorch code without any extra setup, and provide a stable and reliable platform for development, debugging, and small models. However, CPUs become a bottleneck when a workload consists of very large numbers of identical arithmetic operations, as it does when training on large datasets. This is where GPUs, or Graphics Processing Units, come into play.
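In code, the choice between the two usually comes down to a single device object. The following is a minimal sketch, assuming a standard PyTorch installation; the tiny model and its layer sizes are arbitrary and purely illustrative.

```python
import torch
import torch.nn as nn

# Use the GPU when PyTorch can see one, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# A tiny illustrative model; the layer sizes are arbitrary.
model = nn.Sequential(nn.Linear(16, 32), nn.ReLU(), nn.Linear(32, 4)).to(device)

# Inputs must live on the same device as the model's parameters.
batch = torch.randn(8, 16, device=device)

# Inference only, so gradient tracking is switched off.
with torch.no_grad():
    output = model(batch)

print(tuple(output.shape), output.device)
```

Because the same script runs on either kind of hardware, it can be developed on a CPU-only laptop and moved to a GPU machine without changes.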

GPUs were originally designed to render graphics quickly but have since evolved into general-purpose parallel processors. A GPU contains thousands of simple cores that apply the same operation to many data elements at once, which suits the matrix multiplications and convolutions that dominate machine learning workloads. For these operations a GPU delivers much higher throughput than a CPU, so moving a model to a GPU can significantly speed up both training and inference. The gain depends on the workload: small models or small batches may see little benefit, because the cost of transferring data to the GPU and launching work on it can outweigh the faster arithmetic.
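One way to see the difference on your own machine is to time the same matrix multiplication on each device. This is a rough sketch rather than a rigorous benchmark; the helper name `time_matmul`, the matrix size, and the repeat count are all illustrative choices.

```python
import time

import torch


def time_matmul(device, size=512, repeats=5):
    """Return the average seconds per (size x size) matrix multiplication."""
    a = torch.randn(size, size, device=device)
    b = torch.randn(size, size, device=device)

    torch.matmul(a, b)  # warm-up run, excluded from the timing
    if device.type == "cuda":
        torch.cuda.synchronize()  # CUDA work is asynchronous; wait for it

    start = time.perf_counter()
    for _ in range(repeats):
        torch.matmul(a, b)
    if device.type == "cuda":
        torch.cuda.synchronize()  # make sure all timed work has finished
    return (time.perf_counter() - start) / repeats


cpu_seconds = time_matmul(torch.device("cpu"))
print(f"CPU: {cpu_seconds * 1e3:.3f} ms per matmul")

if torch.cuda.is_available():
    gpu_seconds = time_matmul(torch.device("cuda"))
    print(f"GPU: {gpu_seconds * 1e3:.3f} ms per matmul")
```

The explicit synchronization matters: without it, the timer would stop before the GPU had finished its work and the GPU figure would look better than it really is.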

However, choosing the right hardware for your specific needs is not always straightforward. Your budget, the size of your dataset, and the complexity of your model all affect the decision. If your budget is limited, a less powerful GPU or even a CPU may be enough, especially for small models. Conversely, a large dataset or a complex model may call for a more powerful GPU, and GPU memory is often the deciding factor: the model's weights, its gradients, the optimizer state, and the activations for a batch all have to fit on the device during training.
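A back-of-the-envelope calculation can help with sizing before any hardware is bought. The sketch below assumes 32-bit floating-point values and an Adam-style optimizer that keeps two extra values per parameter; it ignores activations and framework overhead, so treat the result as a lower bound. The function name and its parameters are hypothetical.

```python
def estimate_training_memory_gib(num_parameters, bytes_per_value=4, optimizer_states=2):
    """Rough lower bound on training memory in GiB, ignoring activations.

    Counts one copy of the weights, one copy of the gradients, and
    `optimizer_states` extra values per parameter (2 for Adam-style optimizers).
    """
    copies_per_parameter = 1 + 1 + optimizer_states
    total_bytes = num_parameters * bytes_per_value * copies_per_parameter
    return total_bytes / 1024**3


# A hypothetical 100-million-parameter model needs roughly 1.5 GiB
# before any activations are stored.
print(f"{estimate_training_memory_gib(100_000_000):.2f} GiB")
```

Activation memory grows with batch size and model depth, so the real requirement is higher, sometimes substantially.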

Another consideration is hardware acceleration beyond GPUs. Hardware acceleration means using specialized hardware to speed up specific tasks, and in machine learning it can take several forms, such as TPUs (Tensor Processing Units) or FPGAs (Field-Programmable Gate Arrays). These devices are built or configured to optimize operations that are common in machine learning, above all matrix multiplication. Support varies: TPUs are typically used from PyTorch through the separate PyTorch/XLA package, while FPGAs generally depend on vendor-specific toolchains rather than on PyTorch itself. Where such hardware is available and supported, it can further improve the performance of your PyTorch code.
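Before planning around any accelerator, it is worth checking what the current environment can actually see. The sketch below is one possible probe, not an official diagnostic: the function name `visible_backends` is hypothetical, and for PyTorch/XLA it only checks whether the `torch_xla` package is installed, without calling into it.

```python
import importlib.util


def visible_backends():
    """Report which compute backends this Python environment appears to offer."""
    report = {
        "torch_installed": importlib.util.find_spec("torch") is not None,
        # PyTorch/XLA is a separate package; only its presence is checked here.
        "torch_xla_installed": importlib.util.find_spec("torch_xla") is not None,
    }
    if report["torch_installed"]:
        import torch

        report["cuda_available"] = torch.cuda.is_available()
        report["cuda_device_count"] = torch.cuda.device_count()
    return report


for name, value in visible_backends().items():
    print(f"{name}: {value}")
```

An installed package is not proof that matching hardware is attached, so a positive result is only a starting point for further checks.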

In conclusion, the true value of hardware in PyTorch lies in how strongly it affects the performance of your code. By matching your hardware to your budget, dataset, and model, and by taking advantage of hardware acceleration where it is supported, you can make your PyTorch models run as efficiently as possible. CPUs, GPUs, and specialized hardware such as TPUs and FPGAs each have their own strengths and weaknesses, and these should be weighed when building machine learning applications with PyTorch.
